NVIDIA is currently one of the most important corporate brands in the world. Thanks to its range of hardware and software innovations, it has become one of the highest-grossing companies worldwide. Among its many offerings is NVLink, a high-bandwidth interconnect technology.

NVLink is expected to be a decisive step towards highly efficient and effective computing. It speeds up operations by enabling faster data transfer between CPUs and GPUs, and between GPUs themselves. It does this by offering substantially higher bandwidth than traditional PCIe connections.

With that being said, let us dive directly into its details.

As one of the most advanced interconnect technologies currently available, NVLink involves several fundamentals worth a closer look.

As you may already know, NVLink provides high-speed connectivity within the system, resulting in faster data transfers. It achieves this by giving GPUs a dedicated, faster path alongside traditional interconnects such as Peripheral Component Interconnect Express (PCIe), rather than routing that traffic over them.
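To put the PCIe comparison in concrete terms, here is a minimal sketch that computes the ideal time to move a buffer over each interconnect. The bandwidth values are assumptions chosen for illustration: about 16 GB/s per direction approximates a PCIe 3.0 x16 slot, and 100 GB/s is the aggregate NVLink figure quoted in this article. Real transfers also pay latency and protocol overhead, which this model ignores.

```python
# Minimal sketch: ideal time to move a buffer over two interconnects.
# ASSUMPTION: bandwidths are illustrative nominal per-direction values,
# not measurements. ~16 GB/s approximates PCIe 3.0 x16; 100 GB/s is the
# aggregate NVLink figure quoted in the article.

PCIE3_X16_GB_S = 16.0     # assumed, per direction
NVLINK_AGG_GB_S = 100.0   # assumed, per direction


def transfer_time_ms(size_gb: float, bandwidth_gb_s: float) -> float:
    """Ideal transfer time in milliseconds, ignoring latency and overhead."""
    if bandwidth_gb_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_s * 1000.0


if __name__ == "__main__":
    size = 1.0  # a 1 GB buffer
    pcie = transfer_time_ms(size, PCIE3_X16_GB_S)
    nvlink = transfer_time_ms(size, NVLINK_AGG_GB_S)
    print(f"PCIe:    {pcie:.1f} ms")
    print(f"NVLink:  {nvlink:.1f} ms")
    print(f"speedup: {pcie / nvlink:.2f}x")
```

Under these assumed numbers, a 1 GB buffer takes 62.5 ms over PCIe and 10 ms over NVLink, a 6.25x difference; plugging in other bandwidth figures shows how the gap scales.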

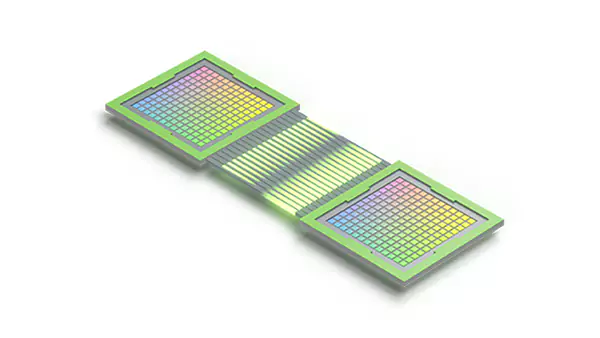

NVLink uses a point-to-point connection architecture. NVIDIA designed each NVLink connection as a bidirectional link made up of several high-speed serial lanes. In NVLink 2.0, each lane signals at roughly 25 Gbit/s, and eight lanes in each direction form one link, giving about 25 GB/s per direction per link. A GPU typically exposes 4 to 6 such links, which is how aggregate bandwidth reaches 100 GB/s and beyond.
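The lane-to-link arithmetic above can be checked with a short calculation. This sketch assumes NVLink 2.0-style nominal figures (about 25 Gbit/s per lane, 8 lanes per direction per link); the actual signaling rate and encoding differ slightly, so treat the results as round numbers rather than specifications.

```python
# Minimal sketch of NVLink bandwidth arithmetic.
# ASSUMPTION: NVLink 2.0-style nominal figures: ~25 Gbit/s per lane and
# 8 lanes per direction per link. Real signaling rates and encoding differ slightly.

BITS_PER_BYTE = 8


def link_gb_s(lane_gbit_s: float, lanes_per_direction: int) -> float:
    """Per-direction bandwidth of a single link, in GB/s."""
    return lane_gbit_s * lanes_per_direction / BITS_PER_BYTE


def aggregate_gb_s(per_link_gb_s: float, links: int, bidirectional: bool = False) -> float:
    """Total bandwidth across all links; doubled when both directions are counted."""
    total = per_link_gb_s * links
    return total * 2 if bidirectional else total


if __name__ == "__main__":
    per_link = link_gb_s(25.0, 8)  # 25 GB/s per direction
    for links in (4, 6):
        one_way = aggregate_gb_s(per_link, links)
        both = aggregate_gb_s(per_link, links, bidirectional=True)
        print(f"{links} links: {one_way:.0f} GB/s per direction, {both:.0f} GB/s total")
```

With 4 links this gives 100 GB/s per direction, and with 6 links 150 GB/s per direction (300 GB/s counting both directions). Keeping per-direction and bidirectional totals separate avoids a common source of confusion when comparing quoted NVLink figures.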

Moreover, with the NVIDIA GPUDirect feature, GPUs in the same system can exchange data directly with one another, without staging it through CPU memory.
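To illustrate why direct GPU-to-GPU communication matters, here is a toy timing model comparing two paths for the same copy. It is a hypothetical sketch, not how GPUDirect is implemented: the "staged" path assumes a device-to-host copy followed by a host-to-device copy over PCIe with no pipelining, while the "direct" path assumes a single NVLink hop. The `Hop` type and bandwidth values are illustrative assumptions.

```python
# Toy model: GPU-to-GPU copy staged through host memory vs. a direct copy.
# ASSUMPTIONS (hypothetical, illustrative only): the staged path is
# device-to-host then host-to-device over PCIe, run back to back with no
# pipelining; the direct path is one NVLink hop. Bandwidths are nominal
# per-direction values in GB/s.

from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    name: str
    bandwidth_gb_s: float


def path_time_ms(size_gb: float, hops: list) -> float:
    """Total time when each hop must finish before the next one starts."""
    return sum(size_gb / hop.bandwidth_gb_s * 1000.0 for hop in hops)


STAGED = [Hop("GPU0 -> host (PCIe)", 16.0), Hop("host -> GPU1 (PCIe)", 16.0)]
DIRECT = [Hop("GPU0 -> GPU1 (NVLink)", 100.0)]

if __name__ == "__main__":
    for label, path in (("staged", STAGED), ("direct", DIRECT)):
        print(f"{label}: {path_time_ms(1.0, path):.1f} ms over {len(path)} hop(s)")
```

In this model, the staged copy of 1 GB takes 125 ms across two hops, while the direct copy takes 10 ms across one. Real drivers can overlap the staged hops, so the actual gap is usually smaller, but removing the trip through host memory remains the key benefit.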

DID YOU KNOW?

As mentioned earlier, NVIDIA is one of the most important companies in the world. Figures reported by investing.com give a sense of its scale: “NVIDIA’s revenue for 2023 was $26.974 billion, a 0.22% advance from 2022.

Its annual revenue for 2022 was $26.914 billion, which is a whopping 61.4% increase from 2021. NVIDIA’s annual revenue for 2021 was $16.675 billion, showing a 52.73% growth from the first year of the pandemic.”

High-performance computing is one of the biggest advantages you get with NVIDIA NVLink. Beyond this, it should now be clear how NVLink lets CPUs and GPUs communicate with each other, and lets GPUs communicate directly.

Because these operations demand high bandwidth, a GPU is typically equipped with 4 to 6 NVLink links, for an aggregate of 100 GB/s or more.

In NVIDIA DGX H100 and DGX SuperPOD infrastructure, NVLink plays a pivotal role. With its high-speed connectivity, large bandwidth, and low latency, NVLink is well suited to supercomputing workloads.

This way, all the GPUs in a DGX H100 system can exchange data directly with one another and work together as a tightly coupled unit. NVLink not only enhances performance but also lets the system take on larger and more demanding computational tasks.

The NVIDIA DGX SuperPOD is an AI data center infrastructure platform that uses NVIDIA’s DGX H100 system.

NVLink has already moved through several generations: NVLink 2.0 debuted with Volta GPUs, NVLink 3.0 with Ampere, and the fourth generation powers the H100 GPUs in DGX H100 systems. Future versions are expected to continue this trajectory.

Each generation interconnects GPUs more efficiently than the last, and newer versions should bring even more computational power and support for more complex operations.

With such technology, working with AI, machine learning, and deep learning should become noticeably easier.