Vector databases have become valuable tools for efficiently searching and retrieving information from vast collections of high-dimensional data. Whether you’re working on recommendation systems, image retrieval, or natural language processing, the performance of your vector database is critical.

In this blog, we will explore key performance indicators to consider when evaluating vector databases, such as recall, precision, and latency. We will also delve into the importance of benchmark datasets and methods for comparing different vector search algorithms and databases.

When assessing the performance of a vector database, two fundamental metrics stand out: recall and precision. These metrics provide insights into the quality and comprehensiveness of search results.

Recall measures the ability of the database to retrieve all items relevant to a query. In other words, it answers the question, “Of all the items that should have been retrieved, how many were actually retrieved?”

High recall indicates that the database successfully finds most of the relevant data points, minimizing the risk of missing valuable information. It also means that when you search for similar items, you are not excluding significant matches.

For example, in an e-commerce system, high recall ensures that when you search for a product, the database doesn’t miss out on potential matches, leading to better recommendations and user satisfaction.
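As a minimal sketch of the definition above, recall can be computed as the share of relevant items that appear in the results. The function name and the product IDs are hypothetical, and returning 0 when nothing is relevant is an assumption made here for convenience.

```python
def recall(retrieved, relevant):
    """Fraction of the relevant items that appear in the retrieved results."""
    relevant = set(relevant)
    if not relevant:
        # Assumption: treat recall as 0.0 when the query has no relevant items.
        return 0.0
    return len(relevant & set(retrieved)) / len(relevant)


# Hypothetical product IDs for a single query.
relevant_ids = ["p1", "p2", "p3", "p4"]   # items that should be found
retrieved_ids = ["p1", "p3", "p9"]        # items the database returned

print(recall(retrieved_ids, relevant_ids))  # 2 of 4 relevant items found -> 0.5
```

Here the database missed two of the four matching products, so half of the potential matches never reach the user.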

Precision, on the other hand, focuses on the relevance of the retrieved items. It measures how many of the retrieved items are actually relevant to the query, answering the question, “Of all the items retrieved, how many are truly relevant?” High precision means that the results are free from irrelevant noise.

In a vector database, precision matters because it directly impacts user experience and decision-making. In applications like information retrieval or content recommendation, high precision means that the items shown to users are highly relevant, leading to increased user trust and engagement.
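Precision can be sketched the same way, this time dividing by the number of items returned rather than the number of relevant items. The function name and IDs below are hypothetical, and returning 0 for an empty result list is an assumption.

```python
def precision(retrieved, relevant):
    """Fraction of the retrieved items that are actually relevant."""
    retrieved = list(retrieved)
    if not retrieved:
        # Assumption: treat precision as 0.0 when nothing was returned.
        return 0.0
    relevant = set(relevant)
    hits = sum(1 for item in retrieved if item in relevant)
    return hits / len(retrieved)


# Hypothetical IDs for a single query.
relevant_ids = ["p1", "p2", "p3", "p4"]
retrieved_ids = ["p1", "p3", "p9"]

# 2 of the 3 returned items are relevant; "p9" is noise.
print(precision(retrieved_ids, relevant_ids))
```

The two metrics pull in different directions: returning more results tends to raise recall but can lower precision, which is why they are usually reported together.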

THINGS TO CONSIDER

Vector databases can store geospatial data, text, features, user profiles, and hashes as metadata associated with the vectors.

While recall and precision focus on the quality of search results, latency is all about speed. Latency measures the time it takes for the database to respond to a query. In real-time applications, such as voice command recognition or instant image search, low latency is essential for providing a seamless user experience.

High-latency databases can lead to delays, frustration, and user abandonment. Therefore, when evaluating vector databases, it’s important to consider both the quality of results and the speed at which these results are delivered.
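One way to measure latency is to time each query and report percentiles as well as the mean, since a few slow queries can hurt users even when the average looks fine. The sketch below assumes a hypothetical `search_fn` callable standing in for a real database client; the warm-up count and the nearest-rank percentile method are also choices made for illustration.

```python
import math
import statistics
import time


def measure_latency(search_fn, queries, warmup=2):
    """Return one latency per query, in milliseconds."""
    for q in queries[:warmup]:
        search_fn(q)  # warm-up calls are executed but not timed
    latencies_ms = []
    for q in queries:
        start = time.perf_counter()
        search_fn(q)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return latencies_ms


def summarize(latencies_ms):
    """Mean plus nearest-rank p50/p95/p99 of a list of latencies."""
    ordered = sorted(latencies_ms)

    def percentile(p):
        # Nearest-rank method: smallest value with at least p% of samples at or below it.
        rank = math.ceil(p * len(ordered) / 100)
        return ordered[max(0, rank - 1)]

    return {
        "mean": statistics.mean(ordered),
        "p50": percentile(50),
        "p95": percentile(95),
        "p99": percentile(99),
    }


# Hypothetical stand-in for a database query; replace with a real client call.
def fake_search(query):
    return sorted(query)


timings = measure_latency(fake_search, [[3, 1, 2]] * 50)
print(summarize(timings))
```

Tail percentiles such as p95 and p99 are often the more useful numbers for real-time applications, because they describe the experience of the slowest requests.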

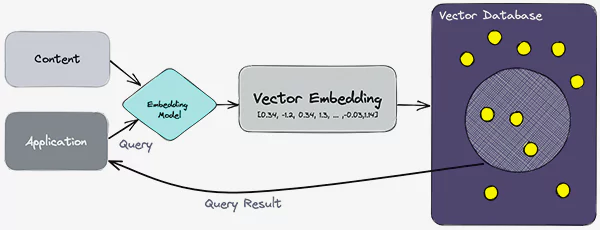

The diagram above shows how a vector database works to answer a query.

To assess the performance of vector databases, experts rely on benchmark datasets and methodologies. Benchmark datasets are collections of data points with known ground truth, allowing for objective evaluation. These datasets cover various domains and include image datasets like MNIST and CIFAR-10, text datasets like TREC and Reuters, and many others.

Benchmark methodologies define standardized procedures for evaluating the performance of vector databases. They outline the steps for conducting experiments, measuring KPIs, and ensuring fair comparisons between different algorithms and databases.
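A benchmark run of this kind can be sketched in a few steps: compute the ground truth with an exact brute-force search, run the candidate search on the same queries, and compare the two. Everything below is illustrative: the random data stands in for a real benchmark dataset, Euclidean distance is an assumed metric, and `half_scan_search` is a hypothetical "approximate" method invented for the example.

```python
import math
import random


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def exact_top_k(query, vectors, k):
    """Brute-force ground truth: indices of the k nearest vectors."""
    order = sorted(range(len(vectors)), key=lambda i: euclidean(query, vectors[i]))
    return order[:k]


def mean_recall_at_k(search_fn, queries, vectors, k):
    """Average overlap between search_fn results and the exact top-k."""
    total = 0.0
    for q in queries:
        truth = set(exact_top_k(q, vectors, k))
        found = set(search_fn(q, k))
        total += len(truth & found) / k
    return total / len(queries)


# Synthetic stand-in for a benchmark dataset (fixed seed for repeatability).
rng = random.Random(0)
vectors = [[rng.random() for _ in range(8)] for _ in range(200)]
queries = [[rng.random() for _ in range(8)] for _ in range(10)]


def exact_search(query, k):
    return exact_top_k(query, vectors, k)


def half_scan_search(query, k):
    # Hypothetical approximate search: only looks at the first half of the data.
    return exact_top_k(query, vectors[:100], k)


exact_score = mean_recall_at_k(exact_search, queries, vectors, 5)
approx_score = mean_recall_at_k(half_scan_search, queries, vectors, 5)
print(exact_score)   # exact search matches the ground truth -> 1.0
print(approx_score)  # lower, because half of the vectors are never scanned
```

Using the same vectors, queries, and `k` for every candidate is what makes the resulting numbers comparable.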

Benchmark datasets and methodologies serve several purposes:

1. Fair Comparison: They enable fair and unbiased comparisons between different vector databases and algorithms. By using the same dataset and evaluation criteria, researchers and users can objectively assess which solutions perform better for specific tasks.

2. Progress Tracking: Benchmark datasets and methodologies allow the tracking of progress in the field. As new algorithms and databases are developed, experts can evaluate their performance against established benchmarks, driving innovation and improvement.

3. Real-World Relevance: Benchmark datasets often reflect real-world scenarios, making the evaluation results applicable to practical applications. This ensures that the performance metrics align with the goals and requirements of the domain in which the vector database will be used.

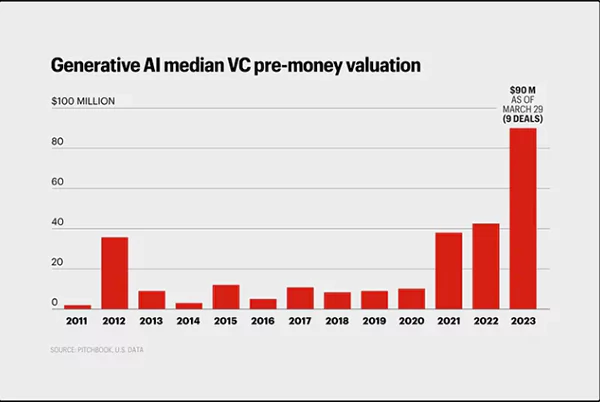

The median venture capital pre-money valuation of generative AI companies has risen sharply since the pandemic, as seen in the graph above. Small businesses and big brands alike want to stay ahead, and vector databases are a valuable tool for them.

Evaluating the performance of vector databases ensures that they meet the requirements of specific applications. Recall, precision, and latency are key performance indicators that help assess the quality and speed of search results.

To conduct robust evaluations, benchmark datasets and methodologies provide a standardized and objective framework for comparing different vector search algorithms and databases.

In a data-driven world where the accuracy and efficiency of information retrieval are paramount, understanding how to evaluate vector databases empowers organizations to make informed decisions and opt for the right solutions for their needs.

By optimizing recall, precision, and latency while adhering to established benchmarks, businesses can provide better user experiences, enhance recommendation systems, and drive innovation across various domains.